In previous installments of this blog series, we’ve told you all about how our solutions help make sense of video quality and work collaboratively to improve it. This time around, we shift focus away from ourselves. Instead, we’ll share some general knowledge about one of the technologies we help make more observable: the MP4 container format.

What is MP4?

MP4 is a container format that allows video, audio and other related information to be bundled together in a single file. Among other things, it’s commonly used in streaming video services.

Is it the same as MPEG-4?

Yes and no (mostly no). The name MP4 comes from the MPEG-4 standard, but the container format is just one part of the MPEG-4 standard. (Part 14 to be precise.)

The MPEG-4 standard also contains many other parts, including specifications for how to encode and decode the video part of the video – the moving pictures – before combining it with audio using MP4 or another container. This codec (specified in Part 10) is called AVC or H.264 but is often just referred to as MPEG-4 for optimal confusion.

Just as the MPEG-4 AVC encoded video can be encapsulated in a different container than MP4 (such as MPEG-2 Transport Stream, Matroska, or Ogg), the MP4 container can encapsulate video content encoded with other codecs – perhaps most notably HEVC, also known as H.265.

The rest of this text is about MP4, the container format specified in MPEG-4 Part 14, not the video codec defined in MPEG-4 Part 10. Confused yet? No? Good!

Where is it used and why?

MP4 files can be used for storing or streaming complete audiovisual content in a single file. Because MP4 content can also easily be divided up into smaller pieces, the format is widely used in streaming video services based on adaptive bitrate technologies such as Smooth Streaming, MPEG-DASH and HLS.

Apple’s HLS technology entered the game early and initially used MPEG-2 Transport Streams as the container format. This is the format used in IPTV, cable, terrestrial and satellite distribution. It works well but has some unnecessary overhead to make room for channel lineup information and other things that just aren’t applicable to streaming videos. Over time, HLS has evolved to also embrace MP4, but Transport Streams are still widely used.

Microsoft’s Smooth Streaming technology was based around MP4 from the start. It can use Transport Streams, but this is rarely if ever seen. MPEG-DASH, in an attempt to create one standard to encompass them all, also supports both MP4 and MPEG-2 Transport Streams, but MP4 has always been the de facto favorite.

History – from QuickTime to an extension of ISO BMFF

The MP4 container format is largely based on the QuickTime file format. If that name sounds familiar, you may be as old as I am. If you’re not, kids, let me tell you a story about what Internet video was like in the mid-nineties.

A short, potato-quality clip would take hours or days to download (provided nobody picked up the phone in the meantime – don’t ask), and then you had to download a video player supporting the file format used – none of this magical built-in browser support. Often, that player was QuickTime, another Apple technology. And it’s this player’s native file format that was used as the basis for the standardized MP4 container.

Subsequently, the core parts of the MP4 container format were broken out into the ISO base media file format (ISO BMFF), with MP4 being redefined as an extension of the ISO BMFF specification.

How is it structured?

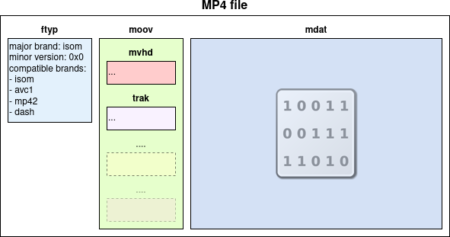

Conceptually, the format is organized into a series of boxes, which may, in turn, contain other boxes. Which types of boxes exist, what information they can contain, and where in the box hierarchy they can appear is clearly defined. Each box type has a four-character identifier.

At the top level, an MP4 file typically consists of:

- an ftyp (file type) box, identifying itself as an MP4 and providing additional compatibility information,

- a moov (movie) box, containing metadata organized in a nested structure of other boxes, and

- an mdat (media data) box, containing the audio/video payload.
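
To make the box layout concrete, here is a minimal Python sketch that lists the top-level boxes in a byte string. It relies on the ISO BMFF box header: a 32-bit big-endian size followed by the four-character type, where a size of 1 means a 64-bit size follows and a size of 0 means the box runs to the end of the data. The names `iter_boxes` and `make_box` are our own for illustration, not part of any library, and the sample bytes are synthetic rather than a playable file.

```python
import struct
from typing import Iterator, Optional, Tuple


def iter_boxes(data: bytes, start: int = 0,
               end: Optional[int] = None) -> Iterator[Tuple[str, int, int]]:
    """Yield (type, payload_start, box_end) for each box in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, raw_type = struct.unpack_from(">I4s", data, pos)
        header = 8
        if size == 1:
            # A size of 1 means the real size is a 64-bit field after the type.
            if pos + 16 > end:
                raise ValueError(f"truncated box header at offset {pos}")
            (size,) = struct.unpack_from(">Q", data, pos + 8)
            header = 16
        elif size == 0:
            # A size of 0 means the box extends to the end of the data.
            size = end - pos
        if size < header or pos + size > end:
            raise ValueError(f"bad box size at offset {pos}")
        yield raw_type.decode("latin-1"), pos + header, pos + size
        pos += size


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build a synthetic box (hypothetical helper, for the demo only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic example: the three top-level boxes described above.
sample = (
    make_box(b"ftyp", b"isom" + bytes(4) + b"isommp42")
    + make_box(b"moov")
    + make_box(b"mdat", bytes(4))
)
print([box_type for box_type, _, _ in iter_boxes(sample)])
# prints ['ftyp', 'moov', 'mdat']
```

Because nested boxes use the same header, calling `iter_boxes(data, payload_start, box_end)` on a container box such as moov walks its children in the same way.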

Fragmented MP4

The ability to divide a movie or live stream into smaller pieces – called fragments, segments or chunks – is key in streaming applications. Rather than waiting for a full MP4 file to download, we want to download no more than a few seconds at a time, so that we can play and download at the same time.

As network conditions change, we also want to be able to seamlessly switch between different versions of the stream with higher or lower resolution or more or less compression. This is what makes adaptive bitrate streaming adaptive. The shorter the segments, the faster we can adapt.

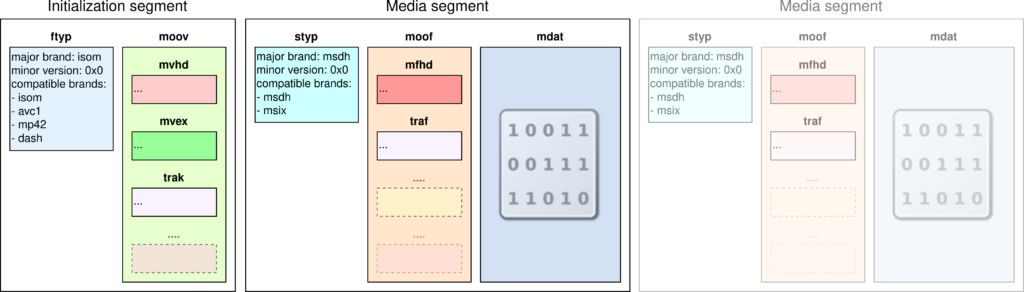

A fragmented MP4 stream consists of an initialization segment and a sequence of media segments. The initialization segment is similar to the start of an unfragmented file: it consists of an ftyp box and a moov box. The moov box contains additional information to indicate that the stream is fragmented, including an mvex (movie extends) box.

Each media segment consists of a moof (movie fragment) box, containing metadata for just that fragment, and an mdat box, containing a portion of the audio/video payload. A segment may also begin with an styp (segment type) box, which is like ftyp but for a segment. In a properly encoded stream, each media segment can be decoded and played back without needing information from any previous segments other than the initialization segment.
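
The distinction between segment types can be sketched in a few lines of Python. This is a rough illustration rather than a validator: it only looks at which boxes are present, using the rules described above (moov with mvex for an initialization segment, moof plus mdat for a media segment). The function names and the returned labels are our own, and the test data is synthetic.

```python
import struct


def iter_boxes(data, start=0, end=None):
    """Yield (type, payload_start, box_end) for each box in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, raw_type = struct.unpack_from(">I4s", data, pos)
        header = 8
        if size == 1:    # 64-bit size stored after the type field
            if pos + 16 > end:
                raise ValueError(f"truncated box header at offset {pos}")
            (size,) = struct.unpack_from(">Q", data, pos + 8)
            header = 16
        elif size == 0:  # box runs to the end of the enclosing data
            size = end - pos
        if size < header or pos + size > end:
            raise ValueError(f"bad box size at offset {pos}")
        yield raw_type.decode("latin-1"), pos + header, pos + size
        pos += size


def classify(data):
    """Guess what kind of MP4 data this is from the boxes it contains."""
    top = {}
    for box_type, payload_start, box_end in iter_boxes(data):
        # Remember the first occurrence of each top-level box type.
        top.setdefault(box_type, (payload_start, box_end))
    if "moov" in top:
        children = {t for t, _, _ in iter_boxes(data, *top["moov"])}
        if "mvex" not in children:
            return "unfragmented file"
        # moov + mvex followed by moof means init and media in one file.
        return "fragmented file" if "moof" in top else "initialization segment"
    if "moof" in top and "mdat" in top:
        return "media segment"
    return "unknown"


def make_box(box_type, payload=b""):
    """Build a synthetic box (hypothetical helper, for the demo only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


init = make_box(b"ftyp", b"iso6" + bytes(4)) + make_box(b"moov", make_box(b"mvex"))
media = make_box(b"styp", b"msdh" + bytes(4)) + make_box(b"moof") + make_box(b"mdat")
print(classify(init))   # prints initialization segment
print(classify(media))  # prints media segment
```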

What about encryption?

Because content providers and streaming services like to make money, encryption is typically used to control who can play a video file and how. This is also called Digital Rights Management (DRM).

The most common and standardized method of encryption for MP4 encapsulated content is called Common Encryption. When using Common Encryption, encryption is only applied to the mdat payload. The metadata remains unencrypted.

Information is also added to the metadata to signal that the content is encrypted and how. This information also includes IDs for the keys needed to decrypt the content.

How to use the key IDs to get the actual keys depends on the specific DRM solution used, but this is the only aspect that is vendor-specific. Which encryption algorithms to use and what to apply them to is all standardized.
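
As an illustration of the signaling described above, the sketch below reads two of the boxes that Common Encryption adds to the metadata: schm (scheme type), which names the protection scheme such as cenc or cbcs, and tenc (track encryption), which carries the default key ID. It assumes you have already located these boxes and extracted their payloads (the bytes after the 8-byte box header). Walking down through the sample description to find them is left out for brevity. The function names are our own, and the key ID in the demo is made up.

```python
def parse_schm(payload: bytes) -> str:
    """Return the four-character protection scheme from an schm payload."""
    # Layout: version (1 byte), flags (3), scheme_type (4), scheme_version (4).
    if len(payload) < 12:
        raise ValueError("schm payload too short")
    return payload[4:8].decode("latin-1")


def parse_tenc(payload: bytes) -> dict:
    """Return the default protection settings from a tenc payload."""
    # Layout: version (1 byte), flags (3), reserved (1),
    # reserved or pattern byte (1), default_isProtected (1),
    # default_Per_Sample_IV_Size (1), default_KID (16).
    if len(payload) < 24:
        raise ValueError("tenc payload too short")
    return {
        "version": payload[0],
        "is_protected": payload[6] == 1,
        "per_sample_iv_size": payload[7],
        "default_kid": payload[8:24].hex(),
    }


# Synthetic payloads with a made-up key ID, for demonstration only.
schm_payload = bytes(4) + b"cenc" + (0x00010000).to_bytes(4, "big")
tenc_payload = bytes(4) + bytes([0, 0, 1, 8]) + bytes(range(16))

print(parse_schm(schm_payload))                 # prints cenc
print(parse_tenc(tenc_payload)["default_kid"])  # prints 000102030405060708090a0b0c0d0e0f
```

The key ID is all the container gives you. Turning it into an actual key is the vendor-specific part and happens outside the file.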

What could possibly go wrong?

MPEG-2 Transport Streams need to carry a lot of checksums and counters because, in traditional IPTV distribution, they are sent using UDP, which is a best-effort protocol. Basically, a server fires a bunch of packets at your set-top box, and hopefully, they arrive intact and in order.

MP4, on the other hand, needs fewer mechanisms of its own for verifying data integrity, since HTTP-based streaming services use TCP, which has back-and-forth communication to ensure your packets arrive in good condition. Therefore, transport monitoring in Content Delivery Networks for streaming services is more about ensuring that content arrives in a timely fashion than checking for data corruption in transit.

But there are still plenty of things to verify at the MP4 container level – mainly issues introduced during packaging. This includes the structure of boxes as well as their content – and it goes beyond validating conformance to specifications.

To give just one example, fluctuations in the duration values of trun (track fragment run) boxes (which reside in the traf box inside the moof box of media segments) may technically be allowed, but they should typically not be expected in well-encoded content. If they do appear, it may be the result of encoder glitches resulting in uneven frame rates and visual artifacts, and it is one of many things to monitor for.
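
A check like the one just described could be sketched as follows. The function reads the per-sample durations from a trun payload (the bytes after the 8-byte box header), using the flag bits that say which optional fields are present, and then compares the smallest and largest values. This is a simplified illustration, not how any particular product does it: the function names and the `tolerance` parameter are our own, and when a trun carries no per-sample durations the defaults stored elsewhere in the metadata apply, which this sketch does not look up.

```python
import struct
from typing import List, Optional


def trun_sample_durations(payload: bytes) -> Optional[List[int]]:
    """Return per-sample durations from a trun payload, or None if absent."""
    version_flags, sample_count = struct.unpack_from(">II", payload, 0)
    flags = version_flags & 0xFFFFFF
    pos = 8
    if flags & 0x000001:      # data_offset present
        pos += 4
    if flags & 0x000004:      # first_sample_flags present
        pos += 4
    if not flags & 0x000100:  # no per-sample durations in this trun
        return None
    # Each sample record holds up to four optional 32-bit fields:
    # duration, size, flags, composition time offset (in that order).
    stride = 4 * sum(bool(flags & bit) for bit in (0x100, 0x200, 0x400, 0x800))
    durations = []
    for i in range(sample_count):
        (duration,) = struct.unpack_from(">I", payload, pos + i * stride)
        durations.append(duration)
    return durations


def has_uneven_durations(durations: Optional[List[int]], tolerance: int = 0) -> bool:
    """True if the durations differ by more than `tolerance` timescale units."""
    return bool(durations) and max(durations) - min(durations) > tolerance


# Synthetic trun payload: data offset, durations and sizes are present.
payload = struct.pack(">IIi", 0x000301, 3, 0)
for duration in (3003, 3003, 6006):
    payload += struct.pack(">II", duration, 100)

durations = trun_sample_durations(payload)
print(durations, has_uneven_durations(durations))
# prints [3003, 3003, 6006] True
```

Durations are expressed in the track's timescale, so a sensible tolerance depends on the content. Some streams legitimately alternate between two nearby values, which is why the threshold is left as a parameter.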

Of course, the Analyzer OTT can do that, but again, this isn’t a post about how fantastic our solutions are – even though they are.

About Alexander Nordström

Alex is a Product Manager and Solution Architect who has been with Agama since 2009. He says the best aspect of this role is the diverse interactions with customers as well as all parts of the Agama team.