Streaming using adaptive bitrate

Adaptive bitrate (ABR) streaming has become widespread over the last 10 years. Compared with traditional IP video delivery, its main innovations were:

- Using HTTP rather than UDP multicast, which lowers the impact of individual packet loss events (the underlying TCP connection retransmits lost packets) and supports delivery over the Internet

- Having multiple bitrates of the video available, called profiles or renditions

Having several renditions lets a device or app switch seamlessly, as network conditions change, between versions of the stream with higher or lower resolution or more or less compression. This is what makes adaptive bitrate streaming adaptive. Every rendition is split into pieces up to a few seconds long, called segments, fragments, or chunks.
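To make the switching idea concrete, here is a minimal sketch of a throughput-based rendition choice. The ladder, the 0.8 safety factor, and the function names are hypothetical and chosen for illustration; real players typically combine a rule like this with buffer-level heuristics, so treat it as one simple approach rather than how any particular player works.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rendition:
    name: str
    bitrate_bps: int  # average bitrate of this profile, in bits per second

# Hypothetical bitrate ladder, for illustration only
LADDER = [
    Rendition("1080p", 6_000_000),
    Rendition("720p", 3_000_000),
    Rendition("480p", 1_200_000),
    Rendition("240p", 400_000),
]

def measure_throughput_bps(segment_bytes: int, download_seconds: float) -> float:
    """Throughput observed while downloading the previous segment."""
    if download_seconds <= 0:
        raise ValueError("download_seconds must be positive")
    return segment_bytes * 8 / download_seconds

def select_rendition(ladder, throughput_bps: float, safety: float = 0.8) -> Rendition:
    """Pick the highest-bitrate rendition that fits within a safety margin
    of the measured throughput; fall back to the lowest rendition otherwise."""
    budget = throughput_bps * safety
    fitting = [r for r in ladder if r.bitrate_bps <= budget]
    if fitting:
        return max(fitting, key=lambda r: r.bitrate_bps)
    return min(ladder, key=lambda r: r.bitrate_bps)
```

For example, a 1,250,000-byte segment downloaded in 2 seconds gives 5 Mbit/s; with the 0.8 margin the budget is 4 Mbit/s, so `select_rendition` picks the 720p profile for the next segment. The margin leaves headroom so that a small drop in bandwidth does not immediately stall playback.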

The most widely adopted formats are Apple's HTTP Live Streaming (HLS) and MPEG-DASH, standardized by MPEG.

Why was CMAF created?

Adaptive bitrate streaming as originally designed had several challenges.

The first is latency. With a traditional HLS setup, playback can lag the live signal by 30 to 60 seconds. This is a problem for content such as sports, where viewers may see the action later than their neighbors do.
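Where those numbers come from can be sketched with a rough model: latency is roughly the number of segments the player stays behind the live edge times the segment duration, plus delay in the encoding and delivery chain. The model and the function name below are assumptions for illustration. The three-segment default reflects RFC 8216, which advises HLS clients not to start less than three target durations from the end of the playlist, and the 10-second example reflects Apple's earlier segment-length guidance; the pipeline allowance is a hypothetical figure.

```python
def estimate_live_latency_s(segment_s: float, segments_behind: int = 3,
                            pipeline_s: float = 0.0) -> float:
    """Rough lower bound on live latency for segment-based streaming.

    segment_s:       segment (target) duration in seconds
    segments_behind: segment durations the player starts behind the live edge
    pipeline_s:      assumed allowance for encoding, packaging and CDN delay
    """
    if segment_s <= 0 or segments_behind < 1 or pipeline_s < 0:
        raise ValueError("invalid parameters")
    return float(segments_behind * segment_s + pipeline_s)

print(estimate_live_latency_s(10))                   # 30.0
print(estimate_live_latency_s(10, pipeline_s=15.0))  # 45.0
```

The model makes the lever obvious: as long as the player buffers whole segments, latency scales with segment duration, which is why shorter units of delivery are the main route to lower latency.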

A second challenge was fragmentation in the media formats used. HLS typically used MPEG Transport Stream (TS) segments, while DASH and Smooth Streaming opted for MP4. Supporting both meant packaging every asset twice, once as TS and once as MP4, which adds cost in both packaging and CDN delivery. One workaround is just-in-time packaging: content is stored in a single mezzanine format (say, MP4) and transmuxed on the fly to another container format, such as TS, when requested. This lowers packaging and storage costs, but not CDN delivery costs, since the CDN still has to cache and deliver both variants.

In 2016, Apple added fragmented MP4 support to HLS in protocol version 7, and the same year Microsoft and Apple jointly proposed a new MP4-based standard, the Common Media Application Format (CMAF). MPEG officially published CMAF in 2018 as ISO/IEC 23000-19, with support from HLS, Smooth Streaming, and MPEG-DASH, allowing the same fragments to be reused across all of these technologies.

In parallel with this container format harmonization, encryption of assets has also moved toward a standard. CMAF is frequently used with Common Encryption (CENC, ISO/IEC 23001-7), another harmonizing MPEG standard. It specifies how to encrypt the media samples in the mdat box and how to signal that encryption, while allowing each DRM vendor to use its own method for retrieving the actual decryption keys. This way, the same fragments can be decrypted on multiple platforms with different DRM systems, provided those platforms support the same encryption scheme (CENC defines both a CTR-based "cenc" scheme and a CBC-based "cbcs" scheme).
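The "one set of fragments, many DRMs" idea is visible in the protection signaling itself: each DRM system gets its own 'pssh' box, identified by a SystemID, and all of them can reference the same key ID (KID). The sketch below serializes a version-1 'pssh' box following the CENC box layout. The Widevine and PlayReady SystemIDs are the published values; the key ID is hypothetical, and the DRM-specific Data payload, which each vendor defines, is left empty here.

```python
import struct
import uuid
from typing import List

# DRM SystemIDs as registered with DASH-IF
WIDEVINE = uuid.UUID("edef8ba9-79d6-4ace-a3c8-27dcd51d21ed")
PLAYREADY = uuid.UUID("9a04f079-9840-4286-ab92-e65be0885f95")

def build_pssh(system_id: uuid.UUID, kids: List[uuid.UUID], data: bytes = b"") -> bytes:
    """Serialize a version-1 'pssh' box: FullBox header, SystemID,
    the key IDs it applies to, then the DRM-specific Data payload."""
    body = bytearray()
    body += struct.pack(">I", 1 << 24)    # version = 1 (8 bits), flags = 0 (24 bits)
    body += system_id.bytes               # 16-byte SystemID
    body += struct.pack(">I", len(kids))  # KID_count
    for kid in kids:
        body += kid.bytes                 # 16 bytes per KID
    body += struct.pack(">I", len(data))  # DataSize
    body += data                          # opaque, defined by the DRM vendor
    return struct.pack(">I4s", 8 + len(body), b"pssh") + bytes(body)

# Hypothetical key ID: one key protects the fragments, two DRM systems signal it
EXAMPLE_KID = uuid.UUID("0123456789abcdef0123456789abcdef")
pssh_boxes = [build_pssh(system, [EXAMPLE_KID]) for system in (WIDEVINE, PLAYREADY)]
```

Only these small signaling boxes differ per DRM; the encrypted media samples they describe are shared.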

How is it structured, and how does it enable lower latency?

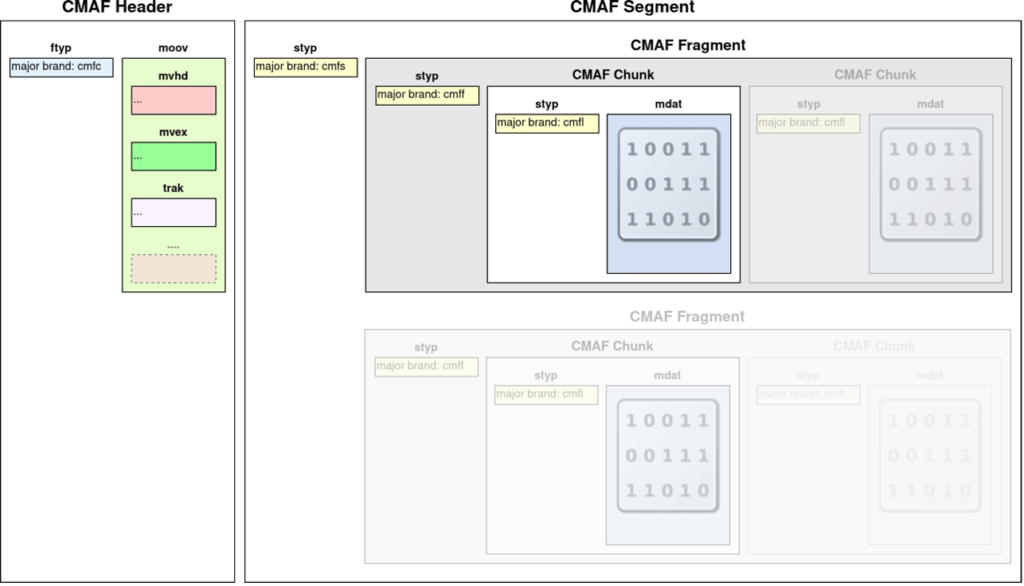

CMAF is based on the ISO Base Media File Format (ISOBMFF, ISO/IEC 14496-12), the same foundation on which MP4 is built. It uses the same concept of boxes as MP4, and many of the boxes used with traditional fragmented MP4 are still present in CMAF. A notable difference is that CMAF defines a hierarchy of progressively smaller subdivisions: a track is made up of segments, each segment of one or more fragments, and each fragment of one or more chunks.
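Because everything is built from boxes, a few lines of code are enough to walk a CMAF file. In ISOBMFF each box starts with a 32-bit big-endian size and a four-character type; a size of 1 means a 64-bit "largesize" follows, and a size of 0 means the box runs to the end of the enclosing data. The sketch below, with a hypothetical function name, lists the top-level boxes of a buffer, for example the 'ftyp' and 'moov' boxes of a CMAF header or the 'moof'/'mdat' pairs of a segment.

```python
import struct
from typing import Iterator, Optional, Tuple

def iter_boxes(data: bytes, offset: int = 0,
               end: Optional[int] = None) -> Iterator[Tuple[str, int, int]]:
    """Yield (box_type, start_offset, size) for each box in data[offset:end]."""
    end = len(data) if end is None else end
    while offset + 8 <= end:
        size, raw_type = struct.unpack_from(">I4s", data, offset)
        header = 8
        if size == 1:
            # 64-bit 'largesize' follows the type field
            if offset + 16 > end:
                raise ValueError(f"truncated largesize at offset {offset}")
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header = 16
        elif size == 0:
            # box extends to the end of the enclosing data
            size = end - offset
        if size < header or offset + size > end:
            raise ValueError(f"invalid or truncated box at offset {offset}")
        yield raw_type.decode("latin-1"), offset, size
        offset += size
```

Container boxes can be walked recursively: for a 'moof' found at `start` with `size`, calling `iter_boxes(data, start + 8, start + size)` lists its children, since 'moof' has a plain 8-byte header.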

The ability to divide a movie or live stream into smaller pieces is key in streaming applications. Rather than waiting for a full MP4 file to download, we want to download no more than a few seconds of content at a time, so that we can play and download at the same time.

But how does CMAF lower the latency from that baseline approach?

The key difference is what is called “chunked encoding”. This means that a segment is internally subdivided into smaller units, called fragments and chunks, and each chunk can be sent as soon as the encoder has produced it. This allows a player to start decoding video without having to download the full segment (which could be anywhere from a few seconds to 10 seconds long), so latency is no longer bounded by the segment duration. For that to happen, the origin and CDN must make the partial segment available to the player. That part of the infrastructure must support continuous transfer of segments and fragments as individual chunks are appended to them, typically using HTTP/1.1 chunked transfer encoding.
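On the player side, chunked delivery means the player can act on each chunk as soon as its last byte arrives instead of waiting for the whole segment. Below is a minimal sketch of that, assuming each chunk is a 'moof' box followed by its 'mdat' and that boxes use 32-bit sizes. The class name is hypothetical, and a real player would also parse the 'moof' to hand samples to the decoder.

```python
import struct
from typing import List, Optional

class ChunkAssembler:
    """Accumulate bytes of a progressively delivered segment and return each
    complete chunk ('moof' followed by its 'mdat') as soon as it has arrived."""

    def __init__(self) -> None:
        self._buf = bytearray()
        self._pending_moof: Optional[bytes] = None

    def feed(self, data: bytes) -> List[bytes]:
        self._buf += data
        chunks: List[bytes] = []
        while len(self._buf) >= 8:
            size, box_type = struct.unpack_from(">I4s", self._buf, 0)
            if size < 8:
                raise ValueError("sketch does not handle 64-bit or open-ended box sizes")
            if len(self._buf) < size:
                break  # wait for the rest of this box to arrive
            box = bytes(self._buf[:size])
            del self._buf[:size]
            if box_type == b"moof":
                self._pending_moof = box
            elif box_type == b"mdat" and self._pending_moof is not None:
                chunks.append(self._pending_moof + box)  # ready to decode
                self._pending_moof = None
            # other boxes (e.g. 'styp', 'prft', 'emsg') are skipped here
        return chunks
```

Feeding it the bytes of a segment as they come off the network, in whatever pieces the transport delivers, yields decodable chunks well before the segment itself is complete.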

Summary

CMAF enables both lower latency and more efficient use of resources, making it an important step towards a broadcast-like experience delivered over the top.

Additional technologies are being developed to reduce latency further, for instance HESP (High Efficiency Streaming Protocol) and Apple's Low-Latency HLS.

About Alexander Nordström

Alex is a Product Manager and Solution Architect who has been with Agama since 2009. He says the best aspect of the role is the diverse interactions with customers as well as with all parts of the Agama team.